The Future of Privacy: More Data and More Choices

As I wrapped up my time at the Future of Privacy Forum, I prepared the following essay in advance of participating in a plenary discussion on the “future of privacy” at the Privacy & Access 20/20 conference in Vancouver on November 13, 2015, my final outing in think tankery.

Alan Westin famously described privacy as the ability of individuals “to determine for themselves when, how, and to what extent information about them is communicated to others.” Today, the challenge of managing, let alone controlling, our information has strained this definition of privacy to the breaking point. As one former European consumer protection commissioner put it, personal information is not just “the new oil of the Internet” but is also “the new currency of the digital world.” Information, much of it personal and much of it sensitive, is now everywhere, and anyone’s individual ability to control it is limited.

Early debates over consumer privacy focused on the role of cookies and other identifiers on web browsers. Technologies that feature unique identifiers have since expanded to include wearable devices, home thermostats, smart lighting, and every type of device in the Internet of Things. As a result, digital data trails will be fed by a broad range of sensors and will paint a more detailed portrait of users than previously imagined. If privacy was once about controlling who knew your home address and what you might be doing inside, our understanding of the word requires revision in a world where every device has a digital address and ceaselessly broadcasts information.

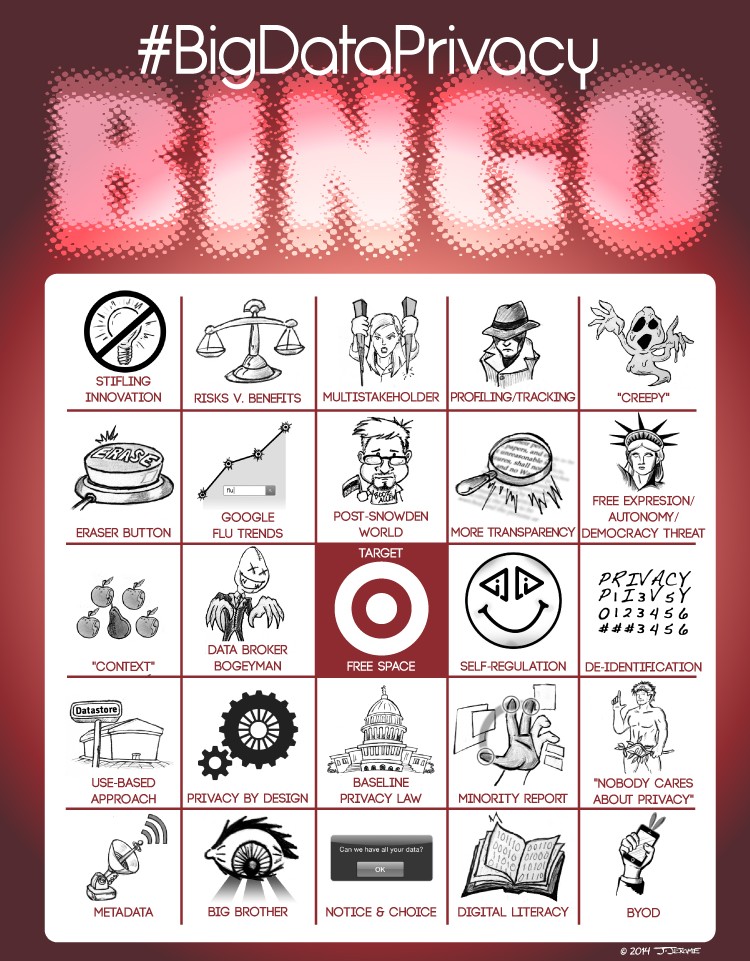

The complexity of our digital world makes explaining all of this data collection and sharing a huge challenge. Privacy policies must be either high-level and generic or technical and detailed, and each option proves of limited value to the average consumer. Many connected devices have little capacity to communicate anything to consumers or passersby. Without meaningful insight, it makes sense to argue that our activities are now subject to the determinations of a giant digital black box. As a result, we see privacy conversations increasingly shift to discussions about fairness, equity, power imbalances, and discrimination.

No one can put the data genie back in the bottle. No one would want to. At a recent convening of privacy advocates, folks discussed the social impact of being surrounded by an endless array of “always on” devices, yet no one was willing to silence their smartphones for even an hour. It has become difficult, if not impossible, to opt out of our digital world, so the challenge moving forward is how to reconcile reality with Westin’s understanding of privacy.

Yes, consumers may grow more comfortable with our increasingly transparent society over time, but survey after survey suggests that the vast majority of consumers feel powerless when it comes to controlling their personal information. Moreover, they want to do more to protect their privacy. This dynamic must be viewed as an opportunity. Rather than dour information management, we need better ways to express our desire for privacy. It is true that “privacy management” and “user empowerment” have been at the heart of efforts to improve privacy for years. Many companies already offer consumers an array of helpful controls, but one would be hard-pressed to convince the average consumer of this. The proliferation of opt-outs and plug-ins has done little to actually provide consumers with any feeling of control.

The problem is that few of these tools actually help individuals engage with their information in a practical, transparent, or easy way. The notion of privacy as clinging to control of our information against faceless entities leaves consumers feeling powerless and frustrated. Privacy needs some rebranding. Privacy must be “appified” and made more engaging. There is a business model to be made in finding a way to marry privacy and control in an experience that is simple and functional. Start-ups are working to answer that challenge, and the rise of ephemeral messaging apps, imperfect implementations though they may be, is a sure sign that consumers want privacy if they can get it easily. For Westin’s view of privacy to have a future, we need to do a better job of embracing creative, outside-the-box ways to get consumers thinking about and engaging with how their data is being used, secured, and ultimately kept private.